This is a set of online notes that I plan to grow into a complete book. The topic is physical layer design and the goal is to provide a useful starting point for engineers and students. These notes will be terse to start, but improve over time. If you find them useful, please email some feedback to me. I will do my best to incorporate your suggestions.

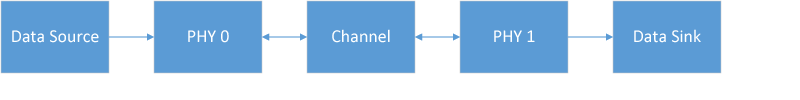

The physical layer is the bottom of the protocol stack in any data or communication network. It sends bits over the channel, which is typically wired (e.g. coaxial cable, Ethernet cable, twisted pair) or wireless (e.g. indoor, outdoor, deep space). The usual interface at the top of the physical layer is bytes of data in and out. In practice there are often many control signals as well, and channel state information (e.g. frequency response, signal-to-noise ratio) may be shared with the upper layers.

Figure 1.1

The interface at the bottom of the physical layer may be an antenna or antenna connector for a wireless system, or a wired connector (such as an RJ45 for Ethernet). The analog electrical signal carries the data bytes from the top over the particular medium. Modern wireless systems use radio transceivers on either licensed spectrum (e.g. LTE) or unlicensed spectrum (e.g. IEEE 802.11). Wired channels are typically a type of twisted pair or coaxial cable. Channels that employ optical techniques, either optical fiber or free space light waves, are not discussed in detail here. However, the same techniques may apply in some cases.

A good physical layer design provides reliable communication over the channel, so the network layers can function. Typically, this involves three common elements. First, there is a known modulation format that transmitters use to map data bits to electrical signals and receivers use to convert the electrical signals back to data bits. The modulation format can incorporate redundancy, such as coding, to reduce errors in transmission.

Second, there are known signals or sequences that are used to synchronize the transmitter to the receiver and allow the receiver to estimate the channel state. Sometimes the receiver shares the channel state information with the transmitter so the transmitter can optimize its choice of signals.

The third element is control signaling between the two physical layers. This includes marking the start and end of transmissions, signaling the particular modulation format, etc. Control signaling addresses many practical factors that are necessary to make a communications network operate.

A variety of mathematical techniques are used in the development and implementation of physical layers. It would be impossible to be an expert in all of them. Instead, I turn to a set of references when I have a new or difficult problem to solve. I list several of my favorite references in this chapter.

This chapter also introduces the notation used throughout this book. Consistent notation helps avoid ambiguity, which inevitably results in error. And avoiding error is what communication is all about.

Mathematical techniques provide both intuitive insight and practical solutions for the specific problems of physical layer design. I try to balance insight and practice, but in some cases the solutions are long and complicated, so for these cases I will just provide the solutions. Once again, it is necessary to explore the references in order to gain a thorough understanding of the solution.

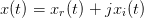

We start with the most general signal which is a complex function of time that can be decomposed into real and imaginary components.

| (2.1) |

The convention is that all signals, variables, functions, vectors, and matrices are complex unless noted as real.

Discrete time signals, sampled at a uniform interval T, are written as functions of a sample index n:

| (2.2) |

A column vector is often used to represent a discrete time signal of finite length:

$x = [x(k-1)\ \ x(k-2)\ \ \ldots\ \ x(0)]^T = \begin{bmatrix} x(k-1) \\ x(k-2) \\ \vdots \\ x(0) \end{bmatrix}$ | (2.3) |

Lower case is typically used for time domain signals and vectors. Upper case is used for frequency domain signals and matrices with more than one column.

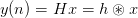

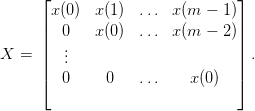

For example, a circulant matrix [HJ85] is useful for demonstrating a circular convolution using vectors:

| (2.4) |

and

| (2.5) |

where x and h are vectors of length k.
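As a minimal sketch of this idea, the Python/NumPy snippet below builds a circulant matrix from h and checks that multiplying it by x gives the circular convolution. It assumes the circulant matrix has h as its first column and that vectors are stored in index order 0 to k − 1, which may differ from the ordering used in (2.3). The helper names `circulant` and `circular_convolve` are illustrative, not taken from the text.

```python
import numpy as np

def circulant(h):
    """Return the k-by-k circulant matrix whose first column is h."""
    h = np.asarray(h)
    k = len(h)
    # Column m is h cyclically shifted down by m positions,
    # so entry (n, m) equals h[(n - m) mod k].
    return np.column_stack([np.roll(h, m) for m in range(k)])

def circular_convolve(h, x):
    """Circular convolution computed directly from its definition."""
    k = len(h)
    return np.array([sum(h[(n - m) % k] * x[m] for m in range(k))
                     for n in range(k)])

# Illustrative length-4 vectors (values are arbitrary).
h = np.array([1.0, 0.5, 0.25, 0.0])
x = np.array([1.0 + 1.0j, -1.0, 2.0j, 0.5])

y_matrix = circulant(h) @ x          # matrix-vector form
y_direct = circular_convolve(h, x)   # definition
y_fft = np.fft.ifft(np.fft.fft(h) * np.fft.fft(x))  # frequency domain form
```

All three results should agree to numerical precision. The FFT form is the one usually used in practice for long vectors.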

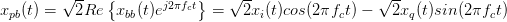

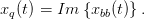

Signals on or around DC (direct current, or zero frequency) are called baseband signals while signals that are around a higher frequency carrier are passband signals. In general the baseband signal is complex, while the passband signal is real. It is common to shift a baseband signal, $x_{bb}$, to passband, $x_{pb}$, and vice versa. The relationships are defined as in [BLM04] and apply to both continuous and discrete-time (with some restrictions) signals:

| (2.6) |

where the common I (in-phase) and Q (quadrature) signals are just the real and imaginary parts:

| (2.7) |

and:

| (2.8) |

The scaling factor is included so that the norms of the baseband and passband signals are the same.

As we will see in later sections, most physical layer processing is done on complex representations of baseband signals. Usually, this is the most convenient way to do things.
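The norm-preserving scaling can be checked numerically. The sketch below assumes the passband relationship has the common form $x_{pb}(t) = \sqrt{2}\,\mathrm{Re}\{x_{bb}(t)e^{j2\pi f_c t}\}$, with I and Q as the real and imaginary parts of $x_{bb}$. The sample rate, carrier frequency, and test signal are arbitrary illustrative choices.

```python
import numpy as np

fs = 1000.0               # sample rate in Hz (illustrative)
fc = 100.0                # carrier frequency in Hz (illustrative)
n = np.arange(2000)
t = n / fs

# Slowly varying complex baseband test signal.
x_bb = np.exp(2j * np.pi * 5.0 * t) * (1.0 + 0.5 * np.cos(2 * np.pi * 2.0 * t))

x_i = x_bb.real           # in-phase component
x_q = x_bb.imag           # quadrature component

# Assumed passband form: sqrt(2) * Re{x_bb * exp(j 2 pi fc t)}.
x_pb = np.sqrt(2.0) * (x_i * np.cos(2 * np.pi * fc * t)
                       - x_q * np.sin(2 * np.pi * fc * t))

energy_bb = np.sum(np.abs(x_bb) ** 2)
energy_pb = np.sum(x_pb ** 2)
```

With the factor of $\sqrt{2}$, the two energies match as long as the double-frequency term averages to zero, which requires the carrier to be well above the baseband bandwidth. Without the factor, the passband energy would be half the baseband energy.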

Much of the machinery of any communication physical layer comes down to manipulation of finite length discrete time signals which are represented with complex vectors. Thus, it is incredibly useful to be comfortable with linear algebra and matrix techniques such as those described in [HJ85]. A few key points are provided here.

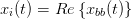

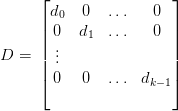

Special matrices are useful as operators when multiplied by vectors that represent signals. We start with the most common.

The diagonal matrix,

| (2.9) |

is a square matrix that will scale each element of a vector. That is, for

$x = [x_0\ \ x_1\ \ \ldots\ \ x_{k-1}]^T$,

$Dx = [d_0 x_0\ \ d_1 x_1\ \ \ldots\ \ d_{k-1} x_{k-1}]^T$ | (2.10) |

The most common diagonal matrix is the identity matrix, I, in which all diagonal elements are 1. Multiplication by the identity matrix does not change the original matrix or vector:

| (2.11) |

and

| (2.12) |

I wrap up this chapter with a quick summary of a set of concepts and techniques that are primarily used in error correcting codes.

The most common field that we use every day is GF(2) (Galois Field of order 2) or binary logic. A field is closed under two operations. For binary logic, these are AND and XOR.

The key problem in communication, in its most abstract form, is information from a transmitter, described by x, that is observed by a receiver as y. We assume x and y are random variables (or vectors of random variables) for which probability distributions are defined. From y, the receiver will attempt to do the best it can to determine x.

The mutual information between x and y is defined as:

$I(x;y) = \log_2 \frac{P[x|y]}{P[x]} = I(y;x) = \log_2 \frac{P[y|x]}{P[y]} = \log_2 \frac{P[x,y]}{P[x]P[y]}$ | (3.1) |

where the base 2 is employed in the logarithm so that the units are bits.

Typically, the transmitter will employ a discrete alphabet for signaling, but the signal will be corrupted by noise in transmission. In many systems, the noise will be additive, white, and Gaussian. The resulting channel has the form:

$Y = X + N$ | (3.2) |

where:

| (3.3) |

and N is zero mean with variance $\sigma^2$. X is a discrete random variable with uniform density:

| (3.4) |

and the density of Y given X is a Gaussian:

| (3.5) |


The capacity of the channel is defined as the maximum possible mutual information over all input densities:

| (3.6) |

The capacity is the highest rate at which error free transmission is possible over a channel. This is a topic that goes well beyond the scope of these notes. A good place to learn more is in [CT91]. Channel capacities are purely theoretical results, but, as we shall see later, they are immensely useful in understanding how well a practical system is operating.

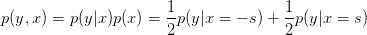

Let’s take a look at the capacity of a binary input AWGN channel. Binary phase shift keying (BPSK) with no intersymbol interference has this characteristic. We have two input symbols, −s and s. We first find

| (3.7) |

and so we can take the sum over the two values of X to determine the density of Y :

| (3.8) |

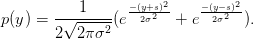

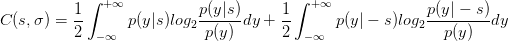

Then the capacity can be written as:

| (3.9) |

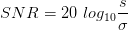

There is no closed form solution. However, the solution can be found by numerical integration. The signal to noise ratio in decibels will be:

| (3.10) |

and the capacity is shown in the figure below.

Figure 3.1
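The numerical integration can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the exact computation behind the figure: it assumes equally likely inputs −s and s (which achieves capacity for this symmetric channel), defines the signal-to-noise ratio as $s^2/\sigma^2$, and computes the mutual information as the entropy of Y minus the entropy of the Gaussian noise. The function name `bpsk_awgn_capacity` and the grid parameters are my own choices.

```python
import numpy as np

def bpsk_awgn_capacity(snr_db, num_points=20001):
    """Capacity in bits per symbol of a binary input AWGN channel.

    Assumes SNR = s^2 / sigma^2 and equally likely inputs -s and +s.
    """
    sigma = 1.0
    s = sigma * 10.0 ** (snr_db / 20.0)

    # Integration grid covering both Gaussian lobes and their tails.
    y = np.linspace(-s - 10.0 * sigma, s + 10.0 * sigma, num_points)
    dy = y[1] - y[0]

    def gauss(mean):
        return (np.exp(-(y - mean) ** 2 / (2.0 * sigma ** 2))
                / np.sqrt(2.0 * np.pi * sigma ** 2))

    # Density of Y: sum over the two values of X.
    p_y = 0.5 * (gauss(s) + gauss(-s))

    # Differential entropy of Y by a simple Riemann sum,
    # skipping grid points where the density underflows to zero.
    mask = p_y > 0
    h_y = -np.sum(p_y[mask] * np.log2(p_y[mask])) * dy

    # Differential entropy of Y given X is that of the Gaussian noise.
    h_y_given_x = 0.5 * np.log2(2.0 * np.pi * np.e * sigma ** 2)

    return h_y - h_y_given_x

snr_points_db = np.arange(-10, 11, 5)
capacities = [bpsk_awgn_capacity(snr) for snr in snr_points_db]
```

The result approaches 1 bit per symbol at high signal-to-noise ratio, since a binary alphabet cannot carry more than that, and falls toward zero as the noise dominates.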

Mutual information and channel capacity set bounds on what is possible, but they don’t provide sufficient insight into how to reach that goal. Additional insight, as well as actual techniques, come from detection and estimation theory.

Given our received signal, y, we want to find an estimate of the transmitted message, x:

| (3.11) |

In fact, we want more than that. We want a good estimate or, if possible, an optimal estimate. But what is optimal?

The most basic and useful measure for optimality in communication is to minimize the number of errors we make. This isn’t as simple as it sounds because, as covered later, it might mean bit errors, symbol errors, frame errors, etc. For now, we can define:

$\Pr(\text{error}) = \Pr[\hat{x} \neq x \mid y]$ | (3.12) |

and, correspondingly:

$\Pr(\text{correct}) = 1 - \Pr(\text{error}) = \Pr[\hat{x} = x \mid y].$ | (3.13) |

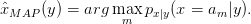

Minimizing the probability of error is equivalent to finding the decision rule that maximizes the probability of correctly selecting the message that was transmitted given the received signal. The Maximum A posteriori Probability (MAP) decision rule is optimal [Lap09] [Gal08] in that it will minimize the probability of error. We can write the MAP rule as:

| (3.14) |

The rule is to evaluate the conditional probability of each candidate transmitted signal $x = a_m$ given the received signal y, and to select the candidate for which this probability is largest.

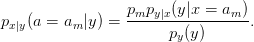

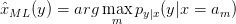

In practice, it is often more common to employ a maximum likelihood (ML) detector. The ML decision metric is easier to compute. It can be derived from the MAP probability by:

| (3.15) |

The ML decision rule is then:

| (3.16) |
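The difference between the two rules shows up when the transmitted symbols are not equally likely. The sketch below assumes a scalar AWGN channel with a real symbol alphabet, so the likelihood of y given each symbol is Gaussian. The MAP metric is the log-likelihood plus the log of the prior, and the ML metric drops the prior. The function names and the numerical values are illustrative only.

```python
import numpy as np

def map_detect(y, symbols, priors, sigma):
    """Pick the symbol maximizing p(y | a_m) * P[a_m] (MAP rule)."""
    log_likelihood = -(y - symbols) ** 2 / (2.0 * sigma ** 2)
    return symbols[np.argmax(log_likelihood + np.log(priors))]

def ml_detect(y, symbols, sigma):
    """Pick the symbol maximizing p(y | a_m) (ML rule)."""
    log_likelihood = -(y - symbols) ** 2 / (2.0 * sigma ** 2)
    return symbols[np.argmax(log_likelihood)]

symbols = np.array([-1.0, 1.0])
sigma = 1.0
y = 0.1   # received value slightly closer to +1

x_ml = ml_detect(y, symbols, sigma)
x_map_equal = map_detect(y, symbols, np.array([0.5, 0.5]), sigma)
x_map_skewed = map_detect(y, symbols, np.array([0.9, 0.1]), sigma)
```

With equal priors the two rules agree and select +1. When −1 is sent 90% of the time, the MAP rule selects −1 for the same observation, because the prior outweighs the small difference in likelihood.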

Communication problems are framed as estimating discrete random variables and sequences. However, that is only one problem that we need to solve in physical layer design. Real communication systems require estimation of non-random parameters, such as carrier frequency or the channel impulse response. There are many different techniques available, but most are based on least squares estimation or an approximation of least squares [BF01][GL89].

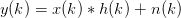

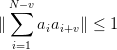

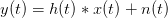

As an example, let’s examine channel impulse response estimation. A known baseband signal x is convolved with an unknown channel, h. The receiver observations are corrupted by noise:

| (3.17) |

The convolution can be expressed as a matrix-vector multiplication with X defined as:

| (3.18) |

The entire equation then takes the form:

$y = Xh + n$ | (3.19) |

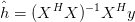

and the least squares estimate of h can be computed:

$\hat{h} = (X^H X)^{-1} X^H y$ | (3.20) |

where $X^H$ denotes the conjugate transpose of $X$.
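A short numerical sketch of this estimator follows. It assumes the convolution matrix X holds delayed copies of the known signal in its columns, so that X times h equals the linear convolution of x and h, and it uses the standard least squares solution via the normal equations. The channel taps, training length, and noise level are hypothetical values chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 4-tap complex channel and a random QPSK-like known signal.
h_true = np.array([1.0, 0.5 - 0.2j, -0.3j, 0.1])
num_taps = len(h_true)
x = rng.choice([-1.0, 1.0], size=64) + 1j * rng.choice([-1.0, 1.0], size=64)

# Convolution matrix: column m holds x delayed by m samples.
num_obs = len(x) + num_taps - 1
X = np.zeros((num_obs, num_taps), dtype=complex)
for m in range(num_taps):
    X[m:m + len(x), m] = x

# Receiver observations: convolution plus complex Gaussian noise.
noise = 0.01 * (rng.standard_normal(num_obs)
                + 1j * rng.standard_normal(num_obs))
y = X @ h_true + noise

# Least squares estimate, computed two equivalent ways.
h_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
h_normal = np.linalg.solve(X.conj().T @ X, X.conj().T @ y)
```

`np.linalg.lstsq` is generally preferable numerically to forming the normal equations explicitly, although both give the same answer for a well conditioned X such as this one.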

Channel coding encompasses a set of techniques that can be used to maximize the error free transmission rate over a channel. This section provides a short background on the theory behind coding, while a later section describes practical implementations that are used in real physical layers.

Classical coding includes algebraic block codes and convolutional codes. These are traditional error correcting codes that have been in common use since the 1960s and are still used today. Modern coding refers to Turbo and Low Density Parity Check (LDPC) codes that can achieve higher performance.

In general, classical coding techniques are more amenable to analytical techniques than modern codes. This makes it easier to select a classical code and predict its performance. Also, classical encoding and decoding techniques are older, which means they are much less likely to be encumbered by intellectual property.

In contrast, it is almost always possible to design a better code with modern techniques and, for this reason, they are used in new high performance systems.

The mathematical theory behind linear block codes is vast and I only scratch the surface here. The standard definition for a linear block code ([LDJC04]) uses a (k,n) generator matrix, G. A length k message, u, is encoded into a length n codeword

$v = uG$ | (3.21) |

where v is an n element row vector describing a codeword in a code C,

| (3.22) |

An (n − k, n) parity check matrix, H, is defined from the generator matrix via

| (3.23) |

and all codewords are part of the null space of H:

$vH^T = 0$ | (3.24) |

The elements of u, v, G, and H are from a finite field. Often the field is GF(2), but other fields are also used, such as GF($2^m$) in Reed-Solomon codes. In GF(2), multiplication is the common Boolean “AND” while addition is the Boolean “XOR”.

Since the code is linear, the all zeros vector is always a codeword. Furthermore, the sum of any two codewords is also a codeword.

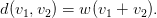

The Hamming weight of a codeword v, written w(v), is defined as the number of non-zero elements it contains. The distance between two codewords is defined as the number of elements in which they differ. This can be expressed as:

| (3.25) |


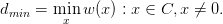

The most important property of a code C is the minimum distance between codewords, $d_{min}$. This determines how effective the code will be in detecting or correcting errors. The minimum distance is equal to the smallest weight among the non-zero codewords:

| (3.26) |
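These definitions can be exercised on a small example. The sketch below uses a (7,4) Hamming code over GF(2) in systematic form, which is my choice of example rather than one taken from the text. It assumes the usual relations: a codeword is the message row vector times G, every codeword times the transpose of H is zero, and arithmetic is carried out modulo 2.

```python
import itertools
import numpy as np

k, n = 4, 7

# Parity portion of a systematic (7,4) Hamming code (illustrative choice).
P = np.array([[1, 1, 0],
              [0, 1, 1],
              [1, 1, 1],
              [1, 0, 1]])

G = np.hstack([np.eye(k, dtype=int), P])        # k-by-n generator matrix
H = np.hstack([P.T, np.eye(n - k, dtype=int)])  # (n-k)-by-n parity check matrix

def encode(u):
    """Encode a length-k message row vector over GF(2)."""
    return (np.asarray(u) @ G) % 2

# Enumerate all 2^k codewords.
codewords = np.array([encode(u)
                      for u in itertools.product([0, 1], repeat=k)])

# Every codeword lies in the null space of H.
syndromes = (codewords @ H.T) % 2

# Minimum distance equals the smallest non-zero Hamming weight.
weights = codewords.sum(axis=1)
d_min = weights[weights > 0].min()
```

For this code the minimum distance is 3, so it can correct any single bit error or detect any pattern of two bit errors.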

Every physical layer design employs known sequences for estimating signal or channel parameters. This is commonly referred to as “receiver training”, i.e. the receiver uses its knowledge of the sequence to determine a signal property, such as power level, frequency offset, or the channel the signal has passed through. Sequences are chosen for certain qualities that determine how well they perform for estimating parameters or how easy they are to use. Several common sequences are described in this section.

A Barker code is a sequence of binary symbols

| (3.27) |

with the property that the autocorrelation at any delay greater than zero has a magnitude no greater than 1. That is:

| (3.28) |

There are only 9 known Barker codes and the longest is of length 13. The length 11 Barker code is used as a spreading sequence in the original 1997 IEEE 802.11 standard:

$a = [\,1\ \ 1\ \ 1\ \ {-1}\ \ {-1}\ \ {-1}\ \ 1\ \ {-1}\ \ {-1}\ \ 1\ \ {-1}\,]$ | (3.29) |

Since the Barker sequence is binary, it is extremely simple to implement both in the transmitter as a modulator and in the receiver as a matched filter. However, its use is limited because of the residual off zero autocorrelation terms.

Figure 3.2
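The autocorrelation property of the length 11 sequence in (3.29) is easy to check numerically. The sketch below assumes the aperiodic (non-cyclic) autocorrelation is the one intended, and uses `np.correlate` in full mode to compute every lag at once.

```python
import numpy as np

# Length 11 Barker sequence from (3.29).
a = np.array([1, 1, 1, -1, -1, -1, 1, -1, -1, 1, -1])

# Full mode returns lags -(N-1) through (N-1); zero lag is in the middle.
r = np.correlate(a, a, mode="full")

zero_lag_index = len(a) - 1
peak = r[zero_lag_index]
sidelobes = np.delete(r, zero_lag_index)
max_sidelobe = np.max(np.abs(sidelobes))
```

The peak equals the sequence length, 11, and no sidelobe exceeds 1 in magnitude. These small but non-zero sidelobes are the residual terms mentioned above.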

CAZAC (Constant Amplitude Zero Autocorrelation) sequences can be constructed for arbitrary length and have a cyclic autocorrelation of zero for every non-zero delay. This property makes them useful for synchronization and channel estimation. Implementation of these sequences is more complicated, however, because they are not drawn from a simple binary alphabet. The amplitude is always one, but the phase angle takes on values that are drawn from $2\pi m/N$, where N is the sequence length and m is an integer.

Even length CAZAC sequences can be generated with:

| (3.30) |

where N is the sequence length and M is an integer relatively prime to N. This type of sequence is often called a Zadoff-Chu sequence after [Chu72].
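As a hedged illustration, the snippet below generates an even length sequence using the form $a_k = e^{j\pi M k^2/N}$ given in [Chu72] for even N, which I assume matches (3.30) up to sign convention. It then checks the two defining properties: constant amplitude and zero cyclic autocorrelation at non-zero delay. The function names are illustrative.

```python
import numpy as np
from math import gcd

def zadoff_chu_even(N, M=1):
    """Even length Zadoff-Chu sequence, a_k = exp(j*pi*M*k^2/N) (assumed form)."""
    if N % 2 != 0:
        raise ValueError("N must be even for this form")
    if gcd(M, N) != 1:
        raise ValueError("M must be relatively prime to N")
    k = np.arange(N)
    return np.exp(1j * np.pi * M * k ** 2 / N)

def cyclic_autocorrelation(a):
    """Cyclic autocorrelation at all delays, computed with the FFT."""
    A = np.fft.fft(a)
    return np.fft.ifft(A * np.conj(A))

a = zadoff_chu_even(16, M=3)
r = cyclic_autocorrelation(a)
```

The autocorrelation is N at zero delay and zero, to numerical precision, at every other delay. Equivalently, the magnitude of the sequence's DFT is flat.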

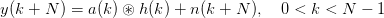

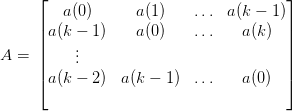

A PHY layer training field can be constructed for simplified channel estimation with a repeated CAZAC sequence. For example, the training field:

$x_{tr}(k) = [a_0\, a_1 \ldots a_{N-1}\ \ a_0\, a_1 \ldots a_{N-1}], \quad 0 \le k \le 2N-1$ | (3.31) |

passes through a linear channel h(k) and is corrupted by noise sequence n(k). Since the training sequence is repeated, the receiver observes a circular convolution of the CAZAC sequence and the channel as the second half of the training sequence is received:

| (3.32) |

| (3.33) |

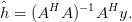

The matched filter for a(k), i.e. a∗(k), applied to y(k), will yield a least squares estimator for h(k). This can be demonstrated by constructing the circulant matrix from a(k), i.e.

| (3.34) |

and noting that the least squares estimate of h(k) is as shown in (3.20):

| (3.35) |

However, for any CAZAC sequence,

| (3.36) |

so

| (3.37) |

Thus, the estimator complexity is reduced from a matrix multiplication to a linear filter.
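The whole procedure can be sketched end to end. The example below assumes an even length Zadoff-Chu sequence of the form $e^{j\pi k^2/N}$ as the CAZAC sequence, a hypothetical channel shorter than N, and no noise, so that the estimate can be compared exactly against the true channel. The estimator is a circular correlation of the second half of the received training field with the conjugate of the sequence, scaled by 1/N.

```python
import numpy as np

N = 16
k = np.arange(N)
a = np.exp(1j * np.pi * k ** 2 / N)   # assumed CAZAC sequence (Zadoff-Chu, M = 1)

# Hypothetical channel impulse response, shorter than the sequence.
h = np.array([0.9, 0.4 - 0.3j, 0.0, -0.2j])

x_tr = np.tile(a, 2)                  # training field: the sequence sent twice
rx = np.convolve(x_tr, h)[:2 * N]     # linear channel, noise omitted
y = rx[N:]                            # second half sees a circular convolution

# Circular matched filter: correlate y with conj(a) at each cyclic delay.
h_hat = np.array([np.sum(np.conj(np.roll(a, delay)) * y)
                  for delay in range(N)]) / N
```

The first four entries of `h_hat` recover the channel taps and the rest are zero. No matrix inversion is needed, because the circulant matrix built from a CAZAC sequence has orthogonal columns.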

LTE systems employ CAZAC (Zadoff-Chu) sequences for primary synchronization of the user equipment (UE) from the base station [Ahm14].

Complementary sequences provide many of the benefits of CAZAC sequences but employ binary alphabets like Barker codes. To do this, the sequences must be used in pairs that together provide good autocorrelation properties. The most common are those described by Golay [Gol61].

A pair of length n binary sequences, a and b, with $a_i \in \{-1, 1\}$ and $b_i \in \{-1, 1\}$, are complementary if:

| (3.38) |

Golay sequences are employed in the PHY preamble of the IEEE 802.11ad standard to facilitate low complexity synchronization and channel estimation at low signal-to-noise ratios.
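A complementary pair can be built and checked with a few lines of code. The sketch below uses the standard recursive doubling construction, in which a pair (a, b) produces the longer pair ([a b], [a −b]). It assumes the complementary property means that the aperiodic autocorrelations of a and b sum to zero at every non-zero delay. The sequences generated here are illustrative and are not the specific sequences defined in IEEE 802.11ad.

```python
import numpy as np

def golay_pair(num_doublings):
    """Build a binary complementary pair of length 2**num_doublings."""
    a = np.array([1])
    b = np.array([1])
    for _ in range(num_doublings):
        # Concatenation step: (a, b) -> ([a b], [a -b]).
        a, b = np.concatenate([a, b]), np.concatenate([a, -b])
    return a, b

a, b = golay_pair(3)   # length 8 pair

# Sum of the two aperiodic autocorrelations over all lags.
r_sum = np.correlate(a, a, mode="full") + np.correlate(b, b, mode="full")
zero_lag_index = len(a) - 1
```

Each sequence alone has non-zero sidelobes, but they cancel exactly in the sum, leaving a single peak of 2n at zero delay.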

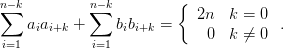

| (4.1) |

where y(t) is the received signal, x(t) is the transmitted signal, h(t) is the frequency selective channel, and n(t) is the Gaussian noise signal. Since the noise is typically introduced at the receiver front end, it does not experience the effects of the channel and can often be assumed to be white. White noise has the property of a flat spectrum, i.e. equal power per unit bandwidth. Perfectly white noise cannot exist, because it would have infinite power. However, it is a useful approximation to assume the noise is white over the band of interest. Typically, this would be over the Nyquist interval, $(-\frac{1}{2T}, \frac{1}{2T})$.

The simplest channel is one in which the frequency response is flat, i.e. h(t) = δ(t) and the noise is white. This is the AWGN channel mentioned in 3.1.

Frequency selectivity occurs naturally in channels and is also designed into transmitters and receivers. Even if the channel itself is flat (e.g. a free space radio channel), the frequency response of components in the transmitter and receiver will create a frequency selective channel.

Figure 4.1

[80216] IEEE Standard for Information technology Part 11: Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications. IEEE Std. 802.11, 2016.

[Ahm14] Sassan Ahmadi. LTE-Advanced: A Practical Systems Approach to Understanding the 3GPP LTE Release 10 and 11 Radio Access Technologies. Academic Press, 2014.

[BDMS91] Ezio Biglieri, Dariush Divsalar, Peter J. McLane, and Marvin Simon. Introduction to Trellis-Coded Modulation with Applications. Macmillan, 1991.

[BF01] Richard L. Burden and J. Douglas Faires. Numerical Analysis, 7th Ed. Brooks Cole, 2001.

[BLM04] John R. Barry, Edward A. Lee, and David G. Messerschmitt. Digital Communication, 3rd Ed. Springer, 2004.

[CB89] R. V. Churchill and J. W. Brown. Complex Variables and Applications, 5th Ed. McGraw-Hill, 1989.

[Che82] E. W. Cheney. Approximation Theory, 2nd Edition. American Mathematical Society, 1982.

[Chu72] David C. Chu. Polyphase codes with good periodic correlation properties. IEEE Transactions on Information Theory, Vol. 18, No. 4, July 1972.

[CT91] Thomas M. Cover and Joy A. Thomas. Elements of Information Theory. John Wiley and Sons, 1991.

[CTB98] G. Caire, Giorgio Taricco, and Ezio Biglieri. Bit-interleaved coded modulation. IEEE Transactions on Information Theory, Vol. 44, No. 3, May 1998.

[Gal08] Robert G. Gallager. Principles of Digital Communication. Cambridge University Press, 2008.

[Gar79] Floyd M. Gardner. Phaselock Techniques. John Wiley and Sons, 1979.

[GL89] Gene H. Golub and Charles F. Van Loan. Matrix Computations, 2nd Ed. Johns Hopkins University Press, 1989.

[Gol61] Marcel J. E. Golay. Complementary series. IRE Transactions on Information Theory, Vol. 7, No. 2, April 1961.

[Han98] Per Christian Hansen. Rank-Deficient and Discrete Ill-Posed Problems. SIAM Series on Mathematical Modeling and Computation, 1998.

[HJ85] Roger A. Horn and Charles R. Johnson. Matrix Analysis. Cambridge University Press, 1985.

[HJ91] Roger A. Horn and Charles R. Johnson. Topics in Matrix Analysis. Cambridge University Press, 1991.

[HW99] Chris Heegard and Stephen B. Wicker. Turbo Coding. Kluwer Academic Publishers, 1999.

[Kay93] Steven M. Kay. Fundamentals of Statistical Signal Processing, Vol. 1: Estimation Theory. Prentice Hall, 1993.

[Lap09] Amos Lapidoth. A Foundation in Digital Communication. Cambridge University Press, 2009.

[LDJC04] Shu Lin and Daniel J. Costello, Jr. Error Control Coding, 2nd Ed. Pearson Prentice Hall, 2004.

[Moo05] Todd K. Moon. Error Correction Coding: Mathematical Methods and Algorithms. John Wiley and Sons, 2005.

[Nee97] Tristan Needham. Visual Complex Analysis. Oxford University Press, 1997.

[Ort90] James M. Ortega. Numerical Analysis: A Second Course. Society for Industrial and Applied Mathematics, 1990.

[OS99] Alan V. Oppenheim and Ronald W. Schafer. Discrete-Time Signal Processing, 2nd Ed. Prentice Hall, 1999.

[Pap84] Athanasios Papoulis. Probability, Random Variables, and Stochastic Processes, 2nd Edition. McGraw-Hill, 1984.

[PS09] John G. Proakis and Masoud Salehi. Digital Communication, 5th Ed. McGraw-Hill, 2009.

[RL09] William E. Ryan and Shu Lin. Channel Codes, Classical and Modern. Cambridge University Press, 2009.

[RU08] Tom Richardson and Rudiger Urbanke. Modern Coding Theory. Cambridge University Press, 2008.

[Rud76] Walter Rudin. Principles of Mathematical Analysis, 3rd Ed. McGraw-Hill, 1976.

[Sal73] J. Salz. Optimum mean-square decision feedback equalization. The Bell System Technical Journal, Vol. 52, No. 8, October 1973.

[Str16] Gilbert Strang. Introduction to Linear Algebra, 5th Edition. Wellesley-Cambridge Press, 2016.

[Tre68] Harry L. Van Trees. Detection, Estimation, and Modulation Theory, Part 1. John Wiley and Sons, 1968.

[TV05] David Tse and Pramod Viswanath. Fundamentals of Wireless Communication. Cambridge University Press, 2005.

[VKG14] Martin Vetterli, Jelena Kovacevic, and Vivek K. Goyal. Foundations of Signal Processing. Cambridge University Press, 2014.